The Future of the Central Office

In this period of massive change across the telecom industry, communications service providers (CSPs) are under increasing stress to provide an ever-growing array of services to millions of on-demand subscribers. This includes 4K video (and soon 8K), massive multiplayer online gaming, augmented and virtual reality experiences, artificial intelligence and machine learning applications, and real-time connectivity for Internet of Things (IoT) applications.

As a result, the underlying network is heavily taxed; traditional access networks can no longer meet these connectivity needs. Gigabit (and beyond) bandwidth has become ubiquitous, but high speeds alone cannot overcome the significant challenges facing CSPs. Operators must also upgrade to Smart Networks that reduce the operational cost of providing such advanced services to their users.

Today’s telecom data networks evolved from voice-only networks and inherited their hardware-centric architectures. A rigid, complex network centered on hardware could once provide the necessary level of service, but it now faces a paradigm shift as CSPs rearchitect their networks to offer new services and adapt rapidly to changing requirements, both of which are key to success today.

The new network must provide a dynamic, scalable approach to network provisioning, especially in the world of 5G, in which applications demand both high bandwidth and low latency. The model is shifting from the traditional, equipment-centric telecom network to a software-defined network (SDN) that is flexible and programmable, with resources that can be deployed and managed on demand, enabling quick adjustments and scaling to keep up with customer requirements.

The Telco Cloud

A cloud-based approach to telecom networks, and with it Network Functions Virtualization (NFV), has been the consensus approach toward the future of networking for nearly five years. While large public cloud providers such as Amazon and Microsoft have successfully deployed SDN in their data centers, CSPs are only now seeking a means of distributing compute resources to improve service quality and security while adding agility and scalability.

Therefore, the central office (CO) at the CSP network edge, which serves as the aggregation point for traffic to and from end users, is now the focus for the initial application of this transition. Aggregating all traffic at the CO creates a bottleneck that can cause a variety of problems, especially in an environment that demands 5G and heightened security. Throughput and latency suffer greatly in the traditional access network, essentially cancelling out much of the gain from technologies such as the optical line terminal (OLT) and fiber-to-the-home (FTTH).

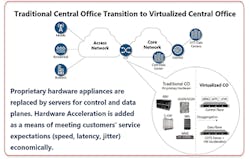

The solution being proposed, and in many cases already being implemented, is to deploy a virtualized, distributed network at the edge. The Open Platform for NFV (OPNFV) and Central Office Re-Architected as a Datacenter (CORD) projects have begun the process of enabling the economies of a data center and the agility of SDN by applying cloud design principles to the CO.

This means moving away from proprietary edge hardware (e.g., edge routers) to bare-metal commercial off-the-shelf (COTS) server arrays in the CO, which promises a more efficient supply chain for the CSP. Software can then be ported onto any switch OS, bypassing the vendor lock-in of proprietary systems in favor of open-environment servers with NFV and programmable logic.

Because of their proximity to end users, disaggregated virtual COs (vCOs) give carriers a significant latency advantage over centralized data centers. By replacing edge routers with COTS servers, which offer general-purpose compute resources and the ability to run any function, CSPs increase their agility to adopt new services and reduce latency by moving the computing closer to the access points.
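The latency benefit of proximity can be illustrated with a back-of-the-envelope propagation estimate. The sketch below assumes a typical refractive index of about 1.468 for silica fiber and ignores queuing, switching, and processing delay; the two distances are hypothetical placeholders, not measurements of any real network.

```python
# Rough propagation-delay estimate over optical fiber.
# Assumption: light travels through silica fiber at c / n, with n ~= 1.468.
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum, km/s
FIBER_REFRACTIVE_INDEX = 1.468      # assumed typical value, not from the article


def fiber_delay_ms(distance_km: float) -> float:
    """One-way propagation delay in milliseconds for a fiber run."""
    speed_in_fiber_km_s = SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX
    return distance_km / speed_in_fiber_km_s * 1000.0


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds."""
    return 2.0 * fiber_delay_ms(distance_km)


if __name__ == "__main__":
    # Hypothetical distances chosen only to show the scale of the difference.
    scenarios = [
        ("nearby vCO (10 km)", 10.0),
        ("centralized data center (1000 km)", 1000.0),
    ]
    for label, km in scenarios:
        print(f"{label}: {round_trip_ms(km):.3f} ms round trip")
```

Propagation delay scales linearly with distance, so under these assumptions a site 100 times closer contributes 100 times less of it. Real end-to-end latency also depends on the equipment along the path.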

vCOs represent a terrific opportunity for operators; they already have vast physical assets at the network edge, giving them a head start on large cloud providers in deploying compute resources there for networking, security, and user applications. CSPs that embrace the vCO concept are well positioned to compete with over-the-top (OTT) providers like Google and Amazon; those that are late to adopt it risk being overtaken by non-traditional service providers.

Value-added services, such as streaming video and AR/VR applications, become more realistic for CSPs to offer their customers when virtualization and cloud design are applied to the CO. For example, content distribution network (CDN) video streaming is possible only in an environment in which a geographically dispersed network of bare-metal servers temporarily caches live and on-demand high-quality video and delivers it to web-connected devices based on the user’s location. Such value-added services are rapidly becoming the primary source of revenue for CSPs.
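Location-based delivery can be sketched as picking the cache site closest to the user. The example below is a minimal illustration under stated assumptions: the site names and coordinates are hypothetical, and great-circle distance stands in for whatever metric a production CDN would use (typically DNS or anycast steering combined with load and health data).

```python
import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical vCO cache sites: name -> (latitude, longitude) in degrees.
CACHE_SITES = {
    "vco-east": (40.71, -74.01),
    "vco-central": (41.88, -87.63),
    "vco-west": (34.05, -118.24),
}


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_cache(user_location, sites=CACHE_SITES):
    """Return the name of the cache site geographically closest to the user."""
    return min(sites, key=lambda name: haversine_km(user_location, sites[name]))


if __name__ == "__main__":
    # A user near the hypothetical east-coast site.
    print(nearest_cache((39.95, -75.17)))
```

Geographic distance is only a proxy for network distance; the point of the sketch is that more dispersed sites give each user a closer candidate.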

The Reality Gap

In theory, CO virtualization is a panacea for the most pressing requirements of the network edge. As mentioned, it disaggregates the traffic, overcomes vendor lock-in, reduces latency, enables value-added services for end users, and controls costs.

The reality is that, despite the great benefits of edge virtualization, there are also several challenges.

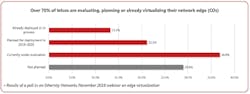

Perhaps most glaringly, the disaggregated network is a work in progress. Research shows that the vast majority of CSPs are either actively working toward or planning CO virtualization; nonetheless, this movement is still in its infancy. There is no question that the advantages will be worthwhile in the end, but a lot of effort is still required to reach that goal.

The other primary concerns for edge virtualization are space and power. Remote locations such as COs were not designed as data centers, so they offer only a limited physical footprint for the arrays of servers that a purely software-based virtualized solution requires. Similarly, they cannot supply enough power for these power-hungry virtual platforms.

If COs of the future are to reap the benefits of edge virtualization and disaggregation, they require a more comprehensive solution that can address those issues.

The Solution: FPGA-Based Hardware Acceleration

One approach that CSPs have tried is to add CPU cores to account for the greater demand. Unfortunately, CPUs are simply sub-optimal for data layer processing. They are better left to compute functions. While application-specific integrated circuits (ASICs) may be better suited to network processing than CPUs, they lack programmability and are closed, proprietary systems.

The best way to address the challenges of the virtualized edge is to accelerate the virtualized software to produce efficiencies that overcome the issues discussed above. Hardware acceleration accomplishes more for the same cost; that is, the existing virtualized solution runs more efficiently, and with the same flexibility as software, allowing additional functionality or significant savings on traditional operating expenses. Instead of adding more servers to the network to increase performance, which increases costs and consumes additional space and power, CSPs can accelerate existing hardware and gain similar benefits, while at the same time adding flexibility, furthering scalability, and reducing costs.

Field-Programmable Gate Arrays (FPGAs) for hardware acceleration represent fully programmable hardware, essentially a software-defined solution in a compact silicon chip.

To maximize the benefits of the FPGA, the VNF data layer is offloaded from the CPU. When network and security functions are ported onto the FPGA, fewer CPU cores are consumed, saving them for the compute functions and user applications for which they were designed. This delivers substantial power savings.

FPGA acceleration uses standard, open resources such as the Data Plane Development Kit (DPDK), reducing CPU load with simplified integration. But the real benefit comes when multiple VNFs are included on a single FPGA or when multiple FPGAs with different VNFs are incorporated into a single server. This multi-access edge computing (MEC) solution reduces the number of physical servers needed and saves even more power.

Beyond the savings in server cores, physical space, and power, FPGAs are also highly scalable even at high bandwidth. They offer enhanced security, with flow isolation and the option to bypass the CPU entirely for encryption and decryption tasks. They offer the deterministic performance and latency of pipeline architecture together with the flexibility and future-proof nature of software programmability. And they avoid vendor lock-in, as there are multiple vendors with easy porting between them.

In fact, in a 2018 paper titled "Azure Accelerated Networking: SmartNICs in the Public Cloud", Microsoft strongly recommended against multicore systems and favored the use of FPGAs for networking in a virtualized environment. According to the paper, multicore solutions offer the required programmability, but come at a great cost. Latency, power, and price are limiting factors with multicore, and all three rise precipitously beyond 40G, making the solution neither scalable nor future-proof.

FPGAs, on the other hand, offer ASIC-like deterministic performance and the programmability of a software solution, with the low latency, low power, and low price that the market demands, and they continue to do so at scale.

Parallel Pipeline Architecture Acceleration

For many years, there has been a consensus in the networking world that parallel processing offers more efficient and more deterministic network performance. Therefore, most ASIC solutions today are based on a parallel pipeline architecture, which can reduce networking cycles and therefore reduce latency and power.

But ASICs are limited by their inability to adapt to changing requirements and conditions. They cannot be upgraded in the field; they must be replaced.

Thanks to FPGA acceleration, it is now possible to use parallel packet processing in a pipeline architecture while also futureproofing the solution with programmability. Dedicated network processing functions can be designed in a parallel pipeline architecture on low-cost FPGAs to offer a compact solution that provides scalable, deterministic performance, with low latency, low power, and easy upgradability.
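A toy cycle-count model shows why a pipeline delivers both high throughput and deterministic latency. It is a sketch under simplifying assumptions, not a description of any particular FPGA design: every stage takes exactly one clock cycle, nothing stalls, and the function names and the `lanes` parameter (the number of identical pipelines running side by side) are illustrative.

```python
def sequential_cycles(num_packets: int, num_stages: int) -> int:
    """Cycles when one engine runs all stages of a packet before the next."""
    return num_packets * num_stages


def pipelined_cycles(num_packets: int, num_stages: int, lanes: int = 1) -> int:
    """Cycles when each stage is its own hardware block.

    A new packet enters each lane every cycle, so after the pipeline fills
    (num_stages cycles) one packet per lane completes on every cycle.
    """
    if num_stages < 1 or lanes < 1:
        raise ValueError("num_stages and lanes must be at least 1")
    if num_packets <= 0:
        return 0
    packets_per_lane = -(-num_packets // lanes)  # ceiling division
    return num_stages + packets_per_lane - 1


if __name__ == "__main__":
    packets, stages = 1000, 4
    print("sequential:", sequential_cycles(packets, stages), "cycles")
    print("pipelined: ", pipelined_cycles(packets, stages), "cycles")
    print("4 lanes:   ", pipelined_cycles(packets, stages, lanes=4), "cycles")
```

In this model every packet sees the same latency of `num_stages` cycles regardless of load, which is the deterministic behavior the text describes, while total time approaches one cycle per packet per lane.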

Conclusion

The next-generation CO relies heavily on edge virtualization and disaggregation of network traffic and functions in order to relieve bottlenecks, as well as to bring value-added services to the network edge. In so doing, CSPs can avoid vendor lock-in and control costs. This gives operators their best chance at competing with OTT service providers.

An SDN solution combined with programmable, FPGA-based parallel pipeline acceleration overcomes the space, power, and scalability challenges of a vCO while further optimizing the network edge, producing the most efficient deployment for the era of 5G in our smart, connected world.

About the Author