8 Collaborative Cloud Edge Strategies

As service providers and enterprises rethink what the edge means for their infrastructure, it is clear that it will take a village to deliver optimized 5G applications over optimized networks. Although network equipment providers offer end-to-end 5G solutions, there’s a strong trend toward disaggregating the network and relying on focused, best-of-breed solution providers to deliver architectural components. Let us take a look at what this means for network service providers.

Edge computing is a key trend that rises to the forefront with the advent of 5G networking. But edge computing is not a new idea. The edge already exists in many mobile networks, where edge-based content-delivery network (CDN) servers hosting popular streaming media content from sites like Netflix and YouTube help reduce both latency and bandwidth consumption. However, the edge becomes much more complex and critical with 5G. (See Figure 1.)

Figure 1.

To achieve ultra-reliable, low-latency support for latency-sensitive services such as industrial automation or autonomous vehicles, the edge is essential. The platform’s location, hardware and software architecture, and ease of integration are all critical factors in the business outcome of the 5G network. After all, if building out the edge is financially impractical or technically problematic, progress will be slow indeed.

The 5G RAN is being divided into three components: the Centralized Unit (CU), the Distributed Unit (DU) and the Radio Unit (RU). Different parts run in different locations, including the edge. The good news is that several organizations are actively promoting open-source, disaggregated architectures, including the O-RAN Alliance, Open Networking Foundation (ONF), Telecom Infra Project (TIP), Linux Foundation (via CNTT) and Open Compute Project (OCP). A whole ecosystem is necessary to build a successful, cost-effective 5G edge.

Edge Architecture

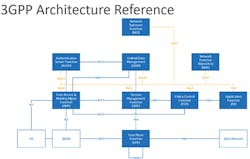

3GPP Release 14 specifies an architecture that allows control and user plane separation (CUPS) in 4G LTE EPCs, where components like the serving gateway (SGW), packet data network gateway (PGW) and traffic detection function (TDF) can be split into the control plane elements (SGW-C, PGW-C, TDF-C) and the user plane function (UPF) elements (SGW-U, PGW-U, TDF-U) that process data packets. (See Figure 2.) In the migration to 5G, UPFs that perform the bulk of the work will need to be hosted at the edge to achieve the sub-10ms, sub-5ms, and, perhaps eventually, sub-1ms, latencies that 5G promises.

Figure 2.
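The latency targets above translate into a limit on how far a UPF can sit from the radio site. The following is a rough sketch of that arithmetic, not a planning tool. It assumes light travels through fiber at roughly 200 km per millisecond (about two-thirds of its speed in a vacuum) and counts only propagation delay plus a single processing allowance. The function name and the 0.5 ms processing figure are illustrative assumptions, not values from any standard.

```python
# Rough propagation-only estimate; all constants here are illustrative assumptions.
FIBER_KM_PER_MS = 200.0  # light in fiber covers roughly 200 km per millisecond


def max_edge_distance_km(rtt_budget_ms: float, processing_ms: float = 0.0) -> float:
    """Return the longest one-way fiber run that still fits the round-trip budget."""
    transport_ms = rtt_budget_ms - processing_ms
    if transport_ms <= 0:
        return 0.0  # processing alone already consumes the budget
    # Half of the remaining budget is available for each direction.
    return (transport_ms / 2.0) * FIBER_KM_PER_MS


if __name__ == "__main__":
    for budget in (10.0, 5.0, 1.0):
        km = max_edge_distance_km(budget, processing_ms=0.5)
        print(f"{budget:>4.1f} ms budget -> at most {km:.0f} km of fiber")
```

Under these assumptions, a 1 ms round-trip budget leaves only tens of kilometers of fiber, which is why the tightest latency classes push user plane processing close to the cell site. Real budgets also have to cover radio scheduling, queuing, and switching delays, so practical distances are shorter.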

Hosting the near real-time control logic of the RAN intelligent controller (RIC) is an excellent example. The software architecture for the open RAN from the O-RAN Alliance and ONF envisions a series of collaborating applications (xApps) that run at the edge and perform near real-time control. This approach allows for spectrum management and improved beamforming within a potentially open, plug-and-play ecosystem. These xApps and the RIC will most likely have to be hosted at the edge to enable the near real-time control needed to react rapidly to changing mobile network conditions.

In addition, the edge architecture needs to be flexible. We can’t always predict the type of innovation or the applications that will come to define 5G. Without a full understanding of what the infrastructure will need under the hood, anything built today needs ample capacity and flexibility to adapt to market demand and new standards as they emerge.

8 Steps to the Edge: Planning Considerations

Service providers exploring edge architectures need to contemplate how to best adapt — not replicate — the standard data center architectures they use today.

Here are 8 guiding principles for planning an edge solution.

1. Ensure space and power efficiency.

It is important to emphasize edge location challenges. Some edges are like small data centers with power and space constraints, while others, like cell sites or street cabinets, face environmental challenges including heat, dust, and moisture.

2. Lay a foundation of security.

Recognize that edge locations are not as secure as data centers, and servers may not be as locked down. If servers are stolen or attacked, sensitive data should not be exposed. Encryption has to be applied liberally and key management is important. For truly sensitive data, service providers should consider tamper detection with data destruction and physical lockdowns or GPS tracking of expensive assets with movement or breach alerts.

3. Maintain robustness of data and application.

In edge locations, processed insights or important raw data need to be periodically sent upstream (to the extent feasible) or offloaded into more permanent storage. Likewise, applications should be designed to keep working for extended periods if servers or storage devices fail, running critical functions in degraded mode until someone can reach the location to swap devices. This likely means building in redundancy without excess investment in remote hardware.
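One common way to approach the upstream offload described here is a store-and-forward buffer: records are held locally while the uplink is unavailable and flushed in order once it returns. The sketch below is a minimal illustration under stated assumptions. The `UpstreamBuffer` class, its `send` callback, and the drop-oldest policy are all hypothetical choices, and a real deployment would persist the backlog to disk rather than hold it in memory.

```python
from collections import deque
from typing import Callable, Deque


class UpstreamBuffer:
    """Hold records locally while the uplink is down, then flush them in order."""

    def __init__(self, send: Callable[[dict], bool], max_records: int = 1000) -> None:
        self._send = send  # hypothetical uplink call; returns True on success
        # A bounded deque silently drops the oldest record once it is full.
        self._pending: Deque[dict] = deque(maxlen=max_records)

    def submit(self, record: dict) -> None:
        """Queue a record and try to deliver everything that is waiting."""
        self._pending.append(record)
        self.flush()

    def flush(self) -> int:
        """Send buffered records oldest-first; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # link still down: keep the record for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def backlog(self) -> int:
        return len(self._pending)


if __name__ == "__main__":
    link = {"up": False}
    delivered = []

    def send(record: dict) -> bool:
        if link["up"]:
            delivered.append(record)
        return link["up"]

    buf = UpstreamBuffer(send, max_records=2)
    for i in range(3):
        buf.submit({"id": i})  # uplink is down, so records accumulate
    link["up"] = True
    buf.flush()
    print(delivered)  # the oldest record was dropped when the buffer filled
```

Dropping the oldest record is only one possible policy. Sites that cannot afford data loss would instead size local storage for the longest expected outage.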

4. Design for low platform overhead.

Unlike in a data center with huge amounts of available resources, every compute cycle at the edge is valuable. The hardware and software platform supporting applications needs to take as little overhead as possible, which is why edge architectures favor bare metal or containers over virtual machines.

5. Be open to diverse hardware options.

In keeping with the last point, general-purpose CPUs might not always be the best answer at the edge. More diverse hardware like FPGAs, GPUs, SmartNICs, AI chips (e.g., Google’s TPU), DPUs, or specialized ASICs may be a better fit for the edge compute problem at hand. These can sometimes provide better performance per watt or per unit of space than CPUs, though they may be less flexible in the types of computation they can perform. Balancing flexibility against other edge constraints is a key part of the architectural decision.

6. Design collaboratively with central clouds.

Recognize that the edge is seldom a standalone system. Many workloads consist of components in central clouds and at the edge working in concert. Compute and storage at the edge are inevitably going to be more expensive than in central clouds. Depending on data set sizes and the cost of upstream network transport, applications will probably process data both at the edge and in the cloud, subject to any data jurisdiction and privacy constraints.
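The trade-off in this principle can be sketched as a simple placement rule: jurisdiction and latency constraints are checked first, and cost decides the rest. The code below is a hypothetical illustration only. The `Workload` fields, the `place` function, and the per-gigabyte cost model are assumptions made for the example, and real placement decisions weigh many more factors.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    max_latency_ms: float          # round-trip latency the application tolerates
    data_gb_per_day: float         # raw data produced at the edge site
    must_stay_local: bool = False  # data jurisdiction or privacy constraint


def place(workload: Workload, cloud_rtt_ms: float, edge_cost_per_gb: float,
          cloud_cost_per_gb: float, transport_cost_per_gb: float) -> str:
    """Return "edge" or "cloud" for one workload under a simple cost model."""
    if workload.must_stay_local:
        return "edge"  # hard constraint: the data may not leave the site
    if workload.max_latency_ms < cloud_rtt_ms:
        return "edge"  # hard constraint: the cloud is too far away
    edge_cost = workload.data_gb_per_day * edge_cost_per_gb
    # Processing in the cloud also pays for hauling the data upstream.
    cloud_cost = workload.data_gb_per_day * (cloud_cost_per_gb + transport_cost_per_gb)
    return "edge" if edge_cost <= cloud_cost else "cloud"


if __name__ == "__main__":
    costs = dict(cloud_rtt_ms=40.0, edge_cost_per_gb=0.05,
                 cloud_cost_per_gb=0.01, transport_cost_per_gb=0.02)
    print(place(Workload("robot-control", 5.0, 10.0), **costs))
    print(place(Workload("nightly-analytics", 5000.0, 500.0), **costs))
```

With these made-up prices, the latency-bound control loop stays at the edge while the batch analytics job moves to the cloud. A higher transport cost would tip the second decision back toward the edge.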

7. Maintain flexibility and agility to accommodate new services.

As there is not yet a full picture of all the services that will run at the edge, architectural designs and models built now should accommodate new services. Likewise, hardware should provide flexibility in the classes of computing, networking, and storage devices that can be configured for an edge stack.

8. Ensure architectural and scaling headroom.

Edge stacks need to accommodate workload sizes that could be larger than the demand today. Even workloads like virtualized and disaggregated RAN require substantial support on the DU and RU components, and 5G UPFs could be called on to manipulate packets for more complex tasks than GTP encapsulation and de-encapsulation.
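For readers unfamiliar with the baseline UPF task mentioned above, the following sketch shows GTP-U encapsulation and de-encapsulation in its simplest form. It assumes the mandatory 8-byte GTPv1-U header only (flags, message type, length, tunnel endpoint identifier) with no sequence numbers or extension headers. The function names are illustrative. A production UPF does this in optimized data-plane code and carries the result over UDP, so treat this as a picture of the packet layout rather than an implementation.

```python
import struct
from typing import Tuple

GTPU_FLAGS = 0x30      # version 1, protocol type GTP, no optional fields
GTPU_MSG_GPDU = 0xFF   # G-PDU: the message carries a user packet
GTPU_HEADER = struct.Struct("!BBHI")  # flags, message type, length, TEID


def gtpu_encapsulate(teid: int, inner_packet: bytes) -> bytes:
    """Prefix a user packet with a minimal GTP-U header for the given tunnel."""
    # The length field counts the bytes that follow the 8-byte mandatory header.
    header = GTPU_HEADER.pack(GTPU_FLAGS, GTPU_MSG_GPDU, len(inner_packet), teid)
    return header + inner_packet


def gtpu_decapsulate(frame: bytes) -> Tuple[int, bytes]:
    """Return (TEID, inner packet) from a minimal G-PDU frame."""
    if len(frame) < GTPU_HEADER.size:
        raise ValueError("frame shorter than the 8-byte GTP-U header")
    flags, msg_type, length, teid = GTPU_HEADER.unpack_from(frame)
    if flags != GTPU_FLAGS or msg_type != GTPU_MSG_GPDU:
        raise ValueError("only plain G-PDU frames without optional fields are handled")
    payload = frame[GTPU_HEADER.size:GTPU_HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    return teid, payload


if __name__ == "__main__":
    frame = gtpu_encapsulate(0x1234, b"hello")
    print(frame.hex())
    print(gtpu_decapsulate(frame))
```

Anything beyond this header manipulation, such as per-flow policy, inspection, or traffic steering, adds work per packet, which is the headroom concern this principle raises.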

As part of a 5G build-out, service providers should implement a flexible framework for 5G RAN and the UPFs.

Getting Started

Service providers will find a growing number of vendors of open RAN systems. For those uncomfortable with sourcing from new vendors or building out these new architectures, system integrators can ease the pain: they can perform the integration, and buffer the service provider from dealing with the uncertainties of new startup vendors. Likewise, service providers can encourage their incumbent equipment providers to forge OEM or resale relationships with 5G infrastructure startups, thereby insulating the service providers from the risks and rough edges that startups may have.

Edge data centers are an essential part of 5G infrastructure, but it takes ecosystem thinking to optimize them. Service providers should consider costs, functionality, interoperability, and scalability when planning to deploy them. For the best outcomes, it takes a village of ecosystem partners to provide ideal solutions. For this reason, 31 partners have joined to strengthen the IBM Telco Cloud.

The success of 5G is dependent on the edge playing the role of host, gathering components of the virtualized and disaggregated RAN stack needed to ensure low-latency processing of mobile traffic.

References and Notes

IBM Telco Network Cloud Manager, https://www.ibm.com/cloud/telco-network-cloud-manager